The implications of ChatGPT for cyber security: What you need to know

Did you know that the launch of ChatGPT has come with a set of important security implications?

OpenAI’s ChatGPT has been revolutionising the way many of us write, research and even code since it launched. But, as with any new technology, it brings its own set of crucial cyber security considerations.

Read on to understand more and discover some of my recommendations for using the platform safely and securely.

What is ChatGPT?

First of all, let’s investigate ChatGPT in a little more detail.

You might be forgiven for assuming the newest obsession in the corporate world is little more than your standard run-of-the-mill chatbot, but it’s a lot more than that.

ChatGPT stands for "Chat Generative Pre-trained Transformer," and it's a language model developed by OpenAI, one of the leading artificial intelligence research organisations in the world. It is a powerful technology that allows users to have conversations with a virtual assistant - think Siri or Alexa - but with even more advanced capabilities.

At its core, ChatGPT is designed to understand human language and generate responses that are similar to those that a human would provide. The technology is based on machine learning algorithms that have been trained on vast amounts of text data from the internet. This training process enables ChatGPT to understand the nuances of human language and generate responses that are contextually relevant and grammatically correct.

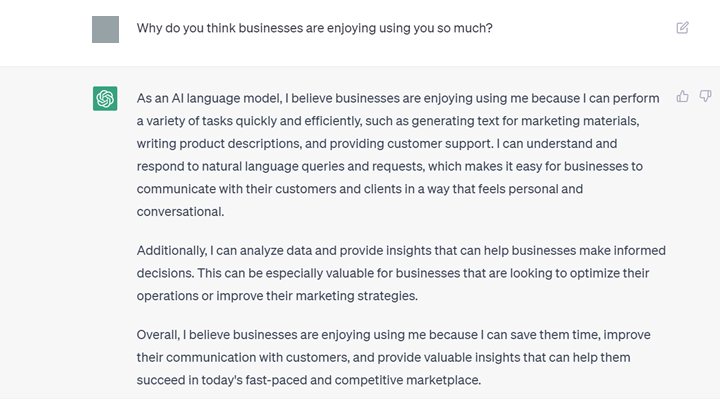

It’s easy to understand why businesses are embracing ChatGPT – just ask it!

Recently, the launch of GPT-4, a more advanced model that can power ChatGPT, has catapulted the technology into greater prominence and heavier use in the business world.

ChatGPT cyber security implications for businesses

Having already amassed so much power, ChatGPT will undeniably impact the world of cyber security. Here’s what you should consider.

Advanced language model creating super realistic phishing emails

Ever thwarted a phishing attempt after picking up some “phishy” broken English or nonsensical requests? These instances might be a thing of the past. ChatGPT is helping cybercriminals work smarter, not harder.

Phishing is a common form of cyber-attack where attackers send emails that appear to be from a legitimate source to trick users into revealing sensitive information. It’s so common that over 169,900 Australians reported a phishing scam in 2021-2022.

With ChatGPT, attackers could generate emails that look and sound just like the real thing, making scams harder to spot and individuals more likely to be manipulated by social engineering attacks. This means you need to be extra cautious when opening emails and clicking on links.

In response to this new threat, make sure your team brushes up on their Security Awareness Training and is familiar with other telltale signs of phishing like:

- A masked or unfamiliar sender's email address;

- Urgent, out-of-character requests; and

- Any suspicious links.

Above and beyond these checks and balances, verifying the identity of the person making the request and being cautious about sharing sensitive information are other measures you can take to keep your business safe.
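To make those telltale signs concrete, here is a minimal Python sketch of how a simple filter might check for them. It is an illustration under stated assumptions, not a replacement for a proper email security product: the function name `phishing_signals`, the `URGENCY_WORDS` list and the idea of a `trusted_domains` set are all hypothetical and would need tuning for your own organisation.

```python
import re
from urllib.parse import urlparse

# Hypothetical keyword list (an assumption); adjust it to the wording your team sees.
URGENCY_WORDS = ("urgent", "immediately", "asap", "right away", "final notice")


def phishing_signals(sender, trusted_domains, body):
    """Return a list of telltale phishing signs found in a single email."""
    signals = []

    # Sign 1: the sender's domain is not one we already know.
    sender_domain = sender.rsplit("@", 1)[-1].lower()
    if sender_domain not in trusted_domains:
        signals.append("unfamiliar sender domain")

    # Sign 2: the message leans on urgency.
    lowered = body.lower()
    if any(word in lowered for word in URGENCY_WORDS):
        signals.append("urgent request")

    # Sign 3: a link points somewhere outside the trusted domains.
    for url in re.findall(r"https?://[^\s<>\"']+", body):
        host = (urlparse(url).hostname or "").lower().rstrip(".")
        trusted = any(host == d or host.endswith("." + d) for d in trusted_domains)
        if not trusted:
            signals.append(f"suspicious link: {host}")

    return signals
```

Calling `phishing_signals("ceo@examp1e.com", {"example.com"}, "URGENT: pay at http://example.com.evil.test/pay")` would flag all three signs. A well-written, AI-generated phishing email can still pass checks like these, which is why verifying the requester's identity remains important.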

Uncertainty about the security of inserted data

With so many users employing ChatGPT to complete a range of tasks from customer service to producing legal documents and memos, there’s a whole lot of personal and sensitive data being entered into its depths.

ChatGPT needs a lot of data to learn and generate responses, and this data is often sourced from public datasets or the internet. However, you might not have considered that the data you put into the platform may be retained by OpenAI. Unless you opt out, it can be used to further train the tool, which means it could surface in responses to other people’s prompts. In other words, your sensitive information could be compromised.

When using ChatGPT, only enter data that you know is safe to share, and avoid including sensitive information like passwords or credit card numbers in your prompts. A virtual private network (VPN) or other security measures can additionally protect your online activity, but they do not change what reaches OpenAI once you submit a prompt.

As a best practice, ChatGPT recommends turning off the 'Chat History and Training' function to prevent OpenAI from using your data to train the model. To do this, click on the three dots on the bottom left of the ChatGPT window and select 'Settings'. Under 'Data Controls', you'll find a toggle switch labelled 'Chat History and Training'.

A guide for inserting data into ChatGPT

Insert

- Generic information, for example prompts or questions like "What's the weather like today?" or "Can you recommend a good restaurant in the area?"

- Publicly available information such as news articles or historical data.

- Non-sensitive information like hobbies, interests, or opinions.

Don't insert

- Sensitive personal information such as your Tax File Number (TFN), credit card numbers, or passwords.

- Proprietary or confidential information like trade secrets, financial information, or business plans.

- Malicious prompts, for example requests to help generate phishing or social engineering attacks.
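If your team pastes text into ChatGPT regularly, one way to support the "don't insert" list is to scrub prompts before they leave your machine. The Python sketch below shows the idea. The function name `redact` and the three patterns are my own illustrative assumptions; they will miss some sensitive data and will also catch harmless numbers (a nine-digit phone number looks like a TFN to this code), so treat it as a safety net rather than a guarantee.

```python
import re

# Illustrative patterns only (assumptions, not an exhaustive detector).
# 13 to 16 digits, optionally separated by spaces or hyphens.
CARD = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")
# 8 or 9 digits in groups of three, as a Tax File Number is often written.
TFN = re.compile(r"\b\d{3}[ -]?\d{3}[ -]?\d{2,3}\b")
# Anything that follows "password:" or "password=".
PASSWORD = re.compile(r"(password\s*[:=]\s*)\S+", re.IGNORECASE)


def redact(prompt):
    """Replace likely card numbers, TFNs and passwords with placeholders."""
    # Cards go first so a long card number is not partly matched as a TFN.
    prompt = CARD.sub("[CARD NUMBER]", prompt)
    prompt = TFN.sub("[TFN]", prompt)
    # Keep the "password:" label and replace only the value after it.
    prompt = PASSWORD.sub(r"\1[PASSWORD]", prompt)
    return prompt


print(redact("My TFN is 123 456 789 and my card is 4111 1111 1111 1111"))
# prints: My TFN is [TFN] and my card is [CARD NUMBER]
```

Generic prompts such as "What's the weather like today?" pass through unchanged. Proprietary or confidential business information cannot be caught by patterns like these, so your usage guidelines still need to cover it.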

Barriers to maintaining compliance

One unexpected but serious implication of ChatGPT is that it could create a barrier to maintaining compliance for certain industries, such as finance, healthcare, and legal services.

As these industries are subject to strict regulations regarding the handling and protection of sensitive information, using ChatGPT as a tool for these businesses could raise concerns about the security and privacy of the information being processed.

Additionally, questions about the accuracy and reliability of the responses generated by ChatGPT could create legal and regulatory risks for businesses.

The bottom line? If you're operating in a heavily regulated industry, carefully consider the potential risks and benefits of using ChatGPT and take steps to ensure you’re able to maintain compliance with applicable regulations.

Best practices for using ChatGPT

Here are my biggest pieces of advice for using ChatGPT safely and securely in your business:

- Conduct a risk assessment to identify potential security and privacy risks associated with using ChatGPT.

- Develop guidelines for the appropriate use of ChatGPT, including handling and protection of sensitive information.

- Ensure ChatGPT is properly configured and maintained to meet the requirements of applicable regulations.

- Provide training to employees and stakeholders on the appropriate use of ChatGPT and the risks and benefits associated with its use (we can do this for you).

- Turn off the 'Chat History and Training' function when using ChatGPT.

By taking these steps, businesses can leverage the benefits of ChatGPT while also ensuring that they are able to maintain compliance with applicable regulations and protect sensitive information.

Get up to speed with the experts

ChatGPT has significant implications for cyber security. It's important to be aware of the potential risks and take steps to protect ourselves. As technology continues to evolve, businesses will need to stay up to date with new developments and strategies to defend against emerging threats – and the advent of ChatGPT is no different.

Want to learn more about ChatGPT, its potential uses and what else to consider? Sign up for our webinar series or contact us to organise a training session for your organisation.

Author

AI is changing the way businesses operate, and as Head of AI & Automation at The Missing Link, I help organisations harness its full potential. With a background in commercial consulting and intelligent automation, I’ve guided companies in streamlining operations, reducing inefficiencies, and embracing AI-driven innovation. Before joining The Missing Link, I led an automation start-up to profitability and have since trained over 2,000 professionals in generative AI, including Microsoft Copilot and ChatGPT. I’ve also authored books on prompt engineering. When I’m not exploring AI’s capabilities, you’ll find me enjoying yoga, golf, or making my daughters laugh.